Sidebar

Table of Contents

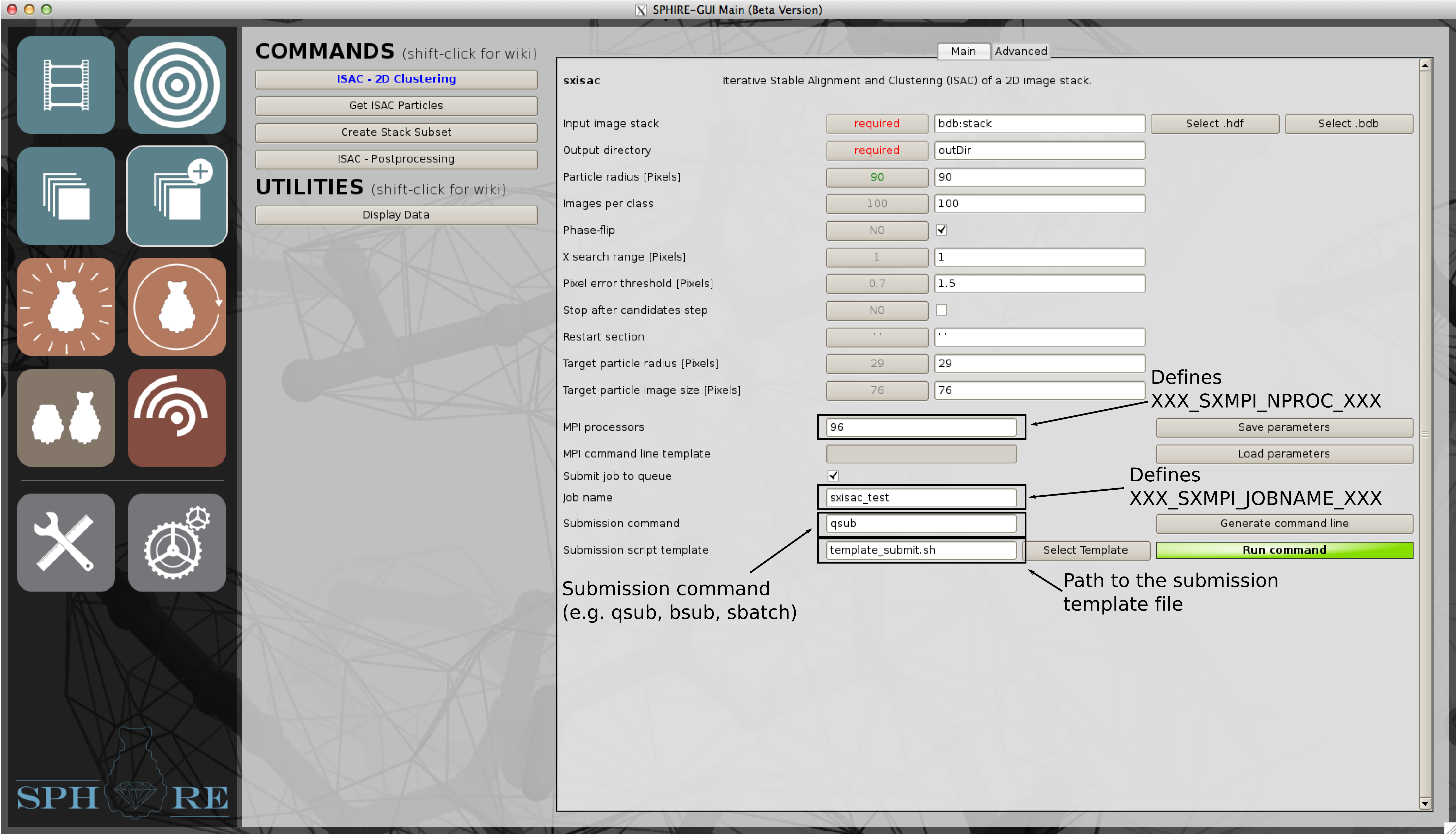

Submit a cluster job from the GUI

Most SPHIRE jobs require heavy computing and are most suited for a cluster environment, although all SPHIRE pipeline commands now support a workstation (or a single node system) since Beta Patch 1 release (sphire_beta_20170602).

Since every cluster environment is a little bit different and everybody has a particular preference for job schedulers we cannot provide support for all the possible cluster configurations. Nevertheless, SPHIRE's GUI can create a submission script for you, given that you provide simple template script. Currently, these are the variables than can be parsed by the GUI:

| Variable | Description | ||

| XXX_SXMPI_NPROC_XXX | Defines the total number of cores to be used. | ||

| XXX_SXMPI_JOB_NAME_XXX | Defines the name of the job. This can be used to name the stdout and stderr and will define the name of the submission script created. | ||

| XXX_SXCMD_LINE_XXX | This will be replaced by the actual command to run SPHIRE. |

For instance, if you want to submit a 2D clustering job (ISAC) from the GUI, you should configure the MPI section of like below:

This will create a file called qsub_sxisac_test.sh with the correct setup and submit it to the cluster.

This variables can be set in the environment to adjust the default values for the job submission text fields.

| Variable | Description | ||

| SPHIRE_MPI_COMMAND_LINE_TEMPLATE | Command line template | ||

| SPHIRE_SUBMISSION_COMMAND | Submission command | ||

| SPHIRE_SUBMISSION_SCRIPT_TEMPLATE | Submission script template | ||

| SPHIRE_SUBMISSION_SCRIPT_TEMPLATE_FOLDER | Folder containing different submission script templates | ||

| SPHIRE_NPROC | Default number of processors | ||

| SPHIRE_ENABLE_QSUB | Use cluster submission by default |

Example Scripts

Please contact your system administrator in order to create an appropriate template in case your cluster uses a different job scheduler.

Example scripts can be downloaded here: QSUB EXAMPLES.

Son of Grid Engine (SGE)

Below you can find one example of the kind of submission templates that we would use in our local cluster.

#!/bin/bash

set echo on

#$ -pe mpi_fillup XXX_SXMPI_NPROC_XXX

#$ -N XXX_SXMPI_JOB_NAME_XXX

#$ -cwd

# Sets up the environment for SPHIRE.

source /work/software/Sphire/EMAN2/eman2.bashrc

MPIRUN=$(which mpirun)

#Creates a file with the nodes used for the calculation. It can be given to mpirun, but

#with the current setup it is not necessary.

set hosts = `cat $PE_HOSTFILE | cut -f 1 -d . | sort | fmt -w 30 | sed 's/ /,/g'`

cat $PE_HOSTFILE | awk '{print $1}' > hostfile.$JOB_ID

${MPIRUN} -np XXX_SXMPI_NPROC_XXX XXX_SXCMD_LINE_XXX

If you use this script and define the variable XXX_SXMPI_JOB_NAME_XXX as above (sxisac_test), then the stderr and stdout will be written to files called sxisac_test.e and sxisac_test.o respectively.

PBS/Torque

Below you can find one example of the kind of submission templates that we would be used for PBS/Torque queueing system.

#!/bin/csh

#PBS -N XXX_SXMPI_JOB_NAME_XXX

#PBS -l nodes=8:ppn=23

module load sphire

cd $PBS_O_WORKDIR

MPIRUN=$(which mpirun)

${MPIRUN} -np XXX_SXMPI_NPROC_XXX --hostfile $PBS_NODEFILE XXX_SXCMD_LINE_XXX

In this case, the user would provide 184 CPU in the GUI.

8 * 23 = 184

Slurm

Below you can find one example of the kind of submission templates that would be used for Slurm queueing system. On our system, we had issues with “the mpirun –map-by node” option, so we recommend to not use for now.

#!/bin/zsh #SBATCH --partition all #SBATCH --time 7-00:00 #SBATCH --ntasks XXX_SXMPI_NPROC_XXX #SBATCH --output XXX_SXMPI_JOB_NAME_XXX.log #SBATCH --job-name XXX_SXMPI_JOB_NAME_XXX module load sphire mpirun -n XXX_SXMPI_NPROC_XXX XXX_SXCMD_LINE_XXX